There are only two hard things in Computer Science: cache invalidation and naming things.

— Phil Karlton

Since functional programming has reached the world of Serious Real Projects, a bunch of new buzzwords started to creep into the language of software engineers. Some of them are: monads, tail recursion, immutability, purity or currying. One of them is also memoization. This term defines the ability to cache results of calls for pure functions which don’t have side effects and always return the same result for given arguments. It is not something special, but we must be aware that with functional paradigms affecting our codebases with bigger and bigger impact, pure functions are becoming a very common thing. Programming languages and platforms themselves don’t offer too many elegant ways to apply memoization to such functions, so let’s see what options do we have with external libraries.

Memoization is just a kind of caching and there are a lot of ways to cache stuff. I’m not going to dive into aspect-oriented caching with magic annotations offered by some frameworks. Such approach is of course powerful and useful in some contextes, but let us focus on some simpler cases, where we don’t want to pull a framework.

Manual approach

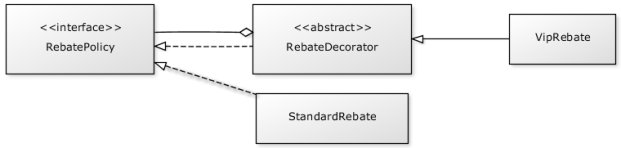

One pretty straightforward method is to use the decorator pattern and wrap our function with a decorator object which stores values and manages the hideous process of cache invalidation. This painful job can be delegated to Guava with its slick API. First, let’s take a look at an example of some expensive function that might need caching:

class GraphBuilder {

def creatGraph(elementCount: Int): Graph = {

someExpensiveCode()

}

}

To do our “manual AOP” and “weave in” the aspect of caching, let’s extract a trait and create a decorator:

trait GraphBuilder {

def createGraph(elementCount: Int): Graph

}

class ExpensiveGraphBuilder extends GraphBuilder {

override def creatGraph(elementCount: Int): Graph = {

someExpensiveCode()

}

}

class CachedGraphBuilder(inner: GraphBuilder) extends GraphBuilder {

val graphs = CacheBuilder.newBuilder()

.maximumSize(10000)

.expireAfter(10, TimeUnit.MINUTES)

.build(

new CacheLoader[Int, Graph]() {

def load(elementCount: Int) {

inner.createGraph(elementCount);

}

})

override def createGraph(elementCount: Int): Graph = {

// hits the cache or calls the inner builder

graphs.get(elementCount)

}

}

Then, when we wire our dependencies, we can instantiate the builder as

val graphBuilder = new CachedGraphBuilder(new ExpensiveGraphBuilder())

It’s a decent approach which keeps our code compilant with the open-closed principle (if you don’t count trait extraction). However, some functions are part of traits or objects and cannot be decorated in such way, also it’s annoying to write the same boilerprate over and over again, so if you find yourself tired of it, it’s time to use a framework try something leaner.

Generating boilerplate in compilation time with macro annotations

MacMemo is a simple library that I implemented as an experiment. It introduces an annotation which can be placed over a function definition. When the compiler runs, it parses the annotation and generates boilerplate around function body to instantiate Guava cache and use it. Long story short, the whole above code becomes:

import com.softwaremill.macmemo.memoize

class GraphBuilder {

@memoize(maxSize = 20000, expiresAfter = 2 hours)

def creatGraph(elementCount: Int): Graph = {

someExpensiveCode()

}

}

We get a very brief solution to ensure that our function results get cached with desired details of invalidation. The macro will generate all necessary boilerplate code and insert it directly into the createGraph() method, wrapping its real code with cache calls.

If you like MacMemo, please star it on GitHub 🙂

Custom cache providers

What if you don’t want Guava? A clever recent contribution of Marcin Kubala extends MacMemo with possibility to define custom providers, so you can easily write your own extension and use memcached, EhCache or whatever you like.

You can achieve this by bringing appropriate implicit MemoCacheBuilder instance into memoized class scope (the scope of the method definition, not it’s usage, e.g: companion object, implicit val within class or an explicit imports available in scope of class definition).

The MemoCacheBuilder trait has following definition:

case class MemoizeParams(maxSize: Long, expiresAfterMillis: Long, concurrencyLevel: Option[Int])

trait MemoCacheBuilder {

def build[V <: Object](bucketId: String, params: MemoizeParams): Cache[V]

}

trait Cache[V] {

def get(key: List[Any], computeValue: => V): V

}

MemoizeParams is a config class instantiated basing on parameters passed to the annotation. The buckedId argument can be used to identify unique key for your annotated method name + enclosing type path (the Guava-based implementation doesn’t use this key but you may find it handy in your implementation). Your MemoCacheBuilder should be responsible for creating an instance of Cache which can take a list of method arguments and return a cached result. This cache instance will be instantiated exactly once per each object of class with annotated method.

How exactly can you bring your builder into the right scope? Here’s an example of one simple way:

class ClassWithMemo {

implicit val cacheProvider = new MyCustomCacheBuilder

@memoize(2, 5 days)

def someMethod(param: Int) = {

someExpensiveCode()

}

}

The builder can be as well represented as an object, so we won’t need the ‘new’ keyword here. For more, check Marcin’s examples on GitHub.

Last, but not the least, MacMemo allows to disable its caching globally with system property, so you can easily switch it off for test purposes.